Most content of this blog is from the book Site Reliability Engineering, it can be seen as the note of what I have learned from the content of book.

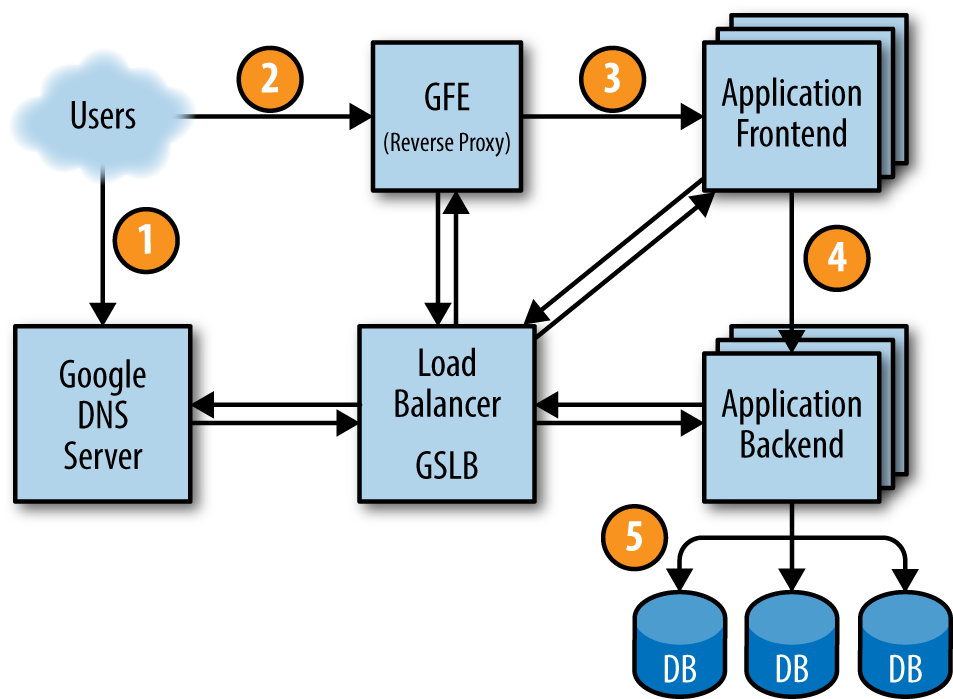

When we enter the address of google.com and press the enter, the browser first query the local cache of DNS of google. If it can’f find any matched entry, the browser send query to a Google DNS server and here is what our story begins.

Load Balance

First Balance Trial: DNS

The browser soon received multiple IPs of where to find the google.com, which can be used by browser to query one by one, i.e. simple round robin load balance. But this behavior is not so ideal – all front end data center will have roughly equal amount of traffic. For users in different locations, some data center may be better then others in the sense of distance, latency. In theory, the SRV records can be used to solve the problem, but, unfortunately, it is not supported by HTTP yet.

Another question is how DNS server know which IP is better for user? Anycast is a way partly solve the problem. Multiple DNS server has the same IP address, and the client’s dns query will be routed to relative closer server.

Due to the implementations of DNS: end user rarely query authoritative servers directly. There exists some middle man which act as cache of authoritative servers. If the middle man didn’t have the dns entry, it will query recursively to the authoritative servers. This way save the time of query, but make load balance harder.

What Recursive Cause?

It make authoritative servers blind because authoritative servers receive the query from middle man but not from end user, which means no user’s IP. One possible solution is to use EDNS0 protocol which include the initial user’s subnet info.

Closer is Enough?

No, it’s not. DNS also need to take the traffic & state of each data center into account, otherwise user may be directed to

a data center experiencing network problems.

Flush Cache?

After some time, authoritative servers may find a better data center for end user, but the middle man still hold the old entry for query. Sadly, the DNS has no mechanism to flush the middle man’s cache, except to set a relative short TTL for entry.

Second Trial: VIP – Balance in OSI Network Layer

Finally, the real query for content of google.com is sent to some data center and received by network load balancer, which can a gateway or reverse proxy. The IP of this query’s target/destination is shared by multiple devices, not bind to a single network interface, which is called virtual IP, i.e. VIP.

The next job is to choose a front end server to accept the query. But choose which? In theory, we can choose the server have least load, which make load more balanced. Even if we can know which server has less load, things is still not so easy.

Stateful

When dealing with stateful request, we need to make sure all the packets of same request (i.e. multiple packets in a single TCP connection) are sent to correct frontend server, otherwise state is break down.

There exists two ways to handle this problem:

- The load balancer keep track of all the connections it redirected: Obviously, when using this method, the pressure of load balancer is very large and the max connection number is limited;

- Use information in packet to identify which packets are from same connection: this can be done using techniques like hash function

id(packet) % N // id is a function produce a connection id // N is the number of frontend server

But stories is not ended. What to do when a front end server is down? The calculation become id % (N-1), which means almost all requests will be directed to different frontend server (If they don’t share any state between each other, it often means user lost their state and connection is reset).

Fortunately, there exists some algorithm to rescue: consistent hashing. This algorithms map each object to a point of circle, and some buckets representing a available machine scatter evenly on circle. When a machine is down, only the requests it is handling will mapping to next bucket, others will not be affected.

And in real application, google adopt the hybrid solution: when traffic is low, using simple connection tracking and fall back to consistent hashing when pressure is high.

Now we know we should send the message to n-th frontend server, but how? Considering we are doing load balancing on the layer there – Network Layer of OSI network model, we can do in the following three methods:

- NAT: network address translation, like what normally router does. But this ask for track for all connections when doing this kind of mapping;

- DSR (direct server response): update the address from VIP’s MAC to the target frontend server’s MAC. This technique also has obvious defect, all the machine have to be in same subnet, which limit the number of machines;

- Packet encapsulation: Load balancer can put the forwarded packet into another IP packet with Generic Routing Encapsulation (GRE). Wrapping the old packet is also not free, which add much size, may leading to the fragment of IP packet. So google increase the MTU in data center to avoid this kind of problems.

As you can see from the following illustration, we have just reached the Application Frontend, and where we really enter the data center, what is going on in there? We will continue in next posts.

Written with StackEdit.

评论

发表评论